In the digital age, data is gold. Whether you are conducting market research, creating content, or analyzing products, collecting and organizing data efficiently is essential. List scraping is a specialized web scraping technique for collecting structured data from websites in an organized way.

Whether you are collecting product catalogs, monitoring prices on e-commerce platforms, or building a database of ranked content, list scraping lays the foundation for effective data collection. This article explores practical techniques for scraping different types of web lists, from product catalogs and infinite scrolling pages to articles, tables, and search results.

What is list scraping?

List scraping is an automated process that focuses on extracting collections of similar items from the web. Unlike regular web scraping, which might target a wide variety of information on a page, list scraping focuses on structured groups of data that follow a consistent pattern, such as product lists, search results, rankings, or tabular data.

Setting up a basic crawler

To set up a basic list scraper, you need some basic components. Python has a rich library ecosystem that provides a good foundation for building efficient scrapers. Here are some commonly used Python libraries:

requests: HTTP client for retrieving web pages.

BeautifulSoup: A library for parsing HTML and extracting data from it with CSS selectors.

Playwright: A tool for automating more complex crawling tasks using real web browsers.

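You can install these libraries with the following pip command (Playwright additionally needs browser binaries, which the playwright install command downloads afterwards):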

$ pip install beautifulsoup4 requests playwright

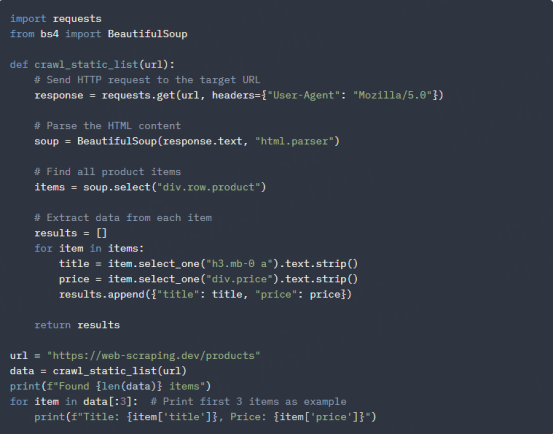

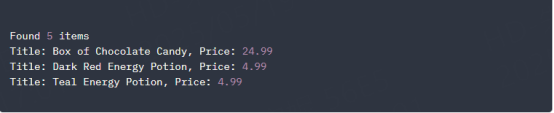

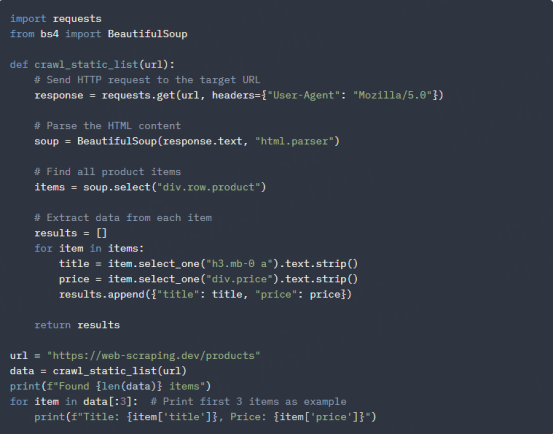

Here is a simple example of a list crawler that fetches a static list page:

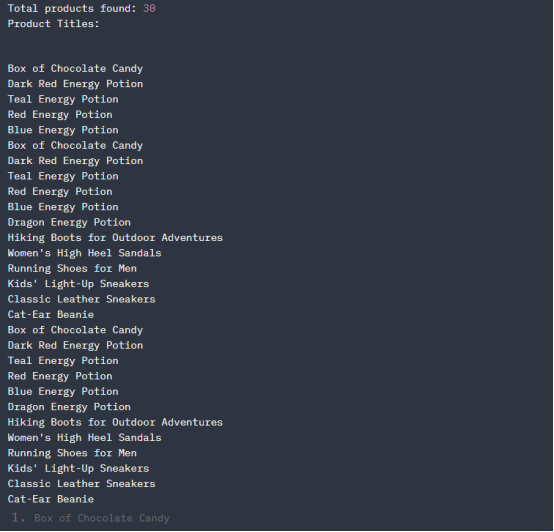

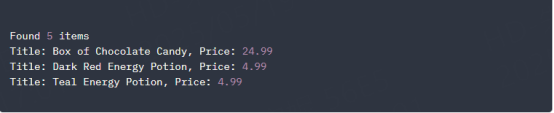

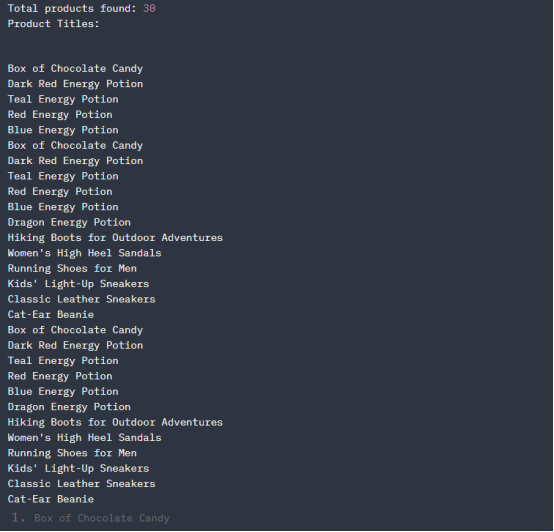


In the code above, we send an HTTP request to the target URL, parse the HTML content using BeautifulSoup, and extract specific data points from each list item. This approach works well for simple static lists where all content is loaded immediately. For more complex scenarios, such as paginated or dynamically loaded lists, you'll need to build on this foundation using the techniques described in the following sections.
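
As a text sketch of that flow, the following assumes each list item sits in an element with the class `product`, with the title in an `h3` and the price in an element with the class `price`. These selectors and the function names are illustrative assumptions, not taken from any particular site, so adjust them after inspecting the real markup.

```python
import requests
from bs4 import BeautifulSoup


def parse_list_items(html: str) -> list[dict]:
    """Extract one record per repeated list item from a static page."""
    soup = BeautifulSoup(html, "html.parser")
    items = []
    # ".product", "h3" and ".price" are assumed selectors for illustration.
    for node in soup.select(".product"):
        title = node.select_one("h3")
        price = node.select_one(".price")
        link = node.select_one("a[href]")
        items.append({
            "title": title.get_text(strip=True) if title else None,
            "price": price.get_text(strip=True) if price else None,
            "url": link["href"] if link else None,
        })
    return items


def scrape_static_list(url: str) -> list[dict]:
    """Fetch a static list page and parse every item on it."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # fail loudly on 4xx/5xx responses
    return parse_list_items(response.text)
```

Keeping the parsing step separate from the download makes it easy to check selectors against a saved copy of the page before crawling the live site.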

The effectiveness of your crawler depends largely on how well you understand the structure of your target website. Taking the time to inspect the HTML code using your browser’s developer tools will help you build precise selectors to target the elements you need.

Now, let's explore how to enhance our basic crawler with more advanced features and different list scraping scenarios.

How to use the Universal Scraping API

The Universal Scraping API is a powerful tool that can help you perform list crawling efficiently. It can handle complex web page structures and extract the data you need.

1. Simulate real user browser fingerprint

The Universal Scraping API generates simulated fingerprints and dynamically adjusts parameters to meet the requirements of the target website. API requests are disguised as ordinary user browsing to avoid being identified as automated crawlers.

2. Automatic JavaScript rendering

The Universal Scraping API includes a JavaScript rendering engine that executes the scripts on a page, so it can handle single-page applications (SPAs) and complex, dynamic, interactive pages.

3. Unlimited concurrent processing capabilities

Lunaproxy supports concurrent requests, so the Universal Scraping API can run multiple tasks at the same time. This raises the data collection rate and meets the needs of large-scale crawling.

4. Automatic CAPTCHA solving

The Universal Scraping API can automatically recognize various CAPTCHAs, including text, image, and simple logic challenges. They are handled in the background without human intervention, which suits large-scale data collection.

5. Automatic proxy and user-agent updates

The API selects and optimizes user agents, dynamically switches IPs and configurations, and simulates real user access to reduce the risk of the crawler being blocked.

Lunaproxy automatically updates the proxy to keep crawling tasks stable and continuous, improving the success rate and efficiency of data crawling.

6. Data integrity verification

The Universal Scraping API compares results against preset models and standards to detect and correct data errors or omissions, so that the output is accurate and complete.

The verification mechanism improves data quality, provides a basis for data analysis and application, and ensures that users can trust the data they use.

The Universal Scraping API returns raw HTML or PNG output, which you can feed into whatever parsing step you need afterwards. The following example uses the Universal Scraping API to scrape product information:

    import requests


    def crawl_with_universal_api(url):
        api_key = "YOUR_API_KEY"  # Replace with your API key
        api_url = "https://api.universalwebcrawling.com/scrape"
        headers = {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        }
        payload = {
            "url": url,
            "output_format": "html",  # Supports "html" or "png"
            "render_js": True,
            "auto_scroll": True,
        }
        response = requests.post(api_url, json=payload, headers=headers, timeout=30)
        if response.status_code == 200:
            # With "html" output this is the page source as text.
            # For "png" output, read the bytes from response.content instead.
            return response.text
        else:
            print(f"API request failed, status code: {response.status_code}")
            return None


    url = "https://web-scraping.dev/product/1"
    html_content = crawl_with_universal_api(url)
    if html_content:
        # Parse the HTML content yourself
        print("Crawled successfully, HTML length:", len(html_content))
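
The concurrent processing described in point 3 can also be driven from the client side. The sketch below is one possible way to do that with a thread pool from the standard library. The helper name `crawl_many` and the default of five workers are assumptions for illustration, and how many parallel requests your plan actually allows is something to check with the provider.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Optional


def crawl_many(urls: list[str],
               fetch: Callable[[str], Optional[str]],
               max_workers: int = 5) -> dict[str, Optional[str]]:
    """Fetch several URLs in parallel; URLs that fail map to None."""
    results: dict[str, Optional[str]] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Remember which future belongs to which URL.
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:
                # One failed page should not stop the whole batch.
                print(f"{url} failed: {exc}")
                results[url] = None
    return results
```

Any function that takes a URL and returns HTML can be passed as `fetch`, for example `crawl_many(urls, crawl_with_universal_api)` with the function defined above.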

Scraping paginated lists

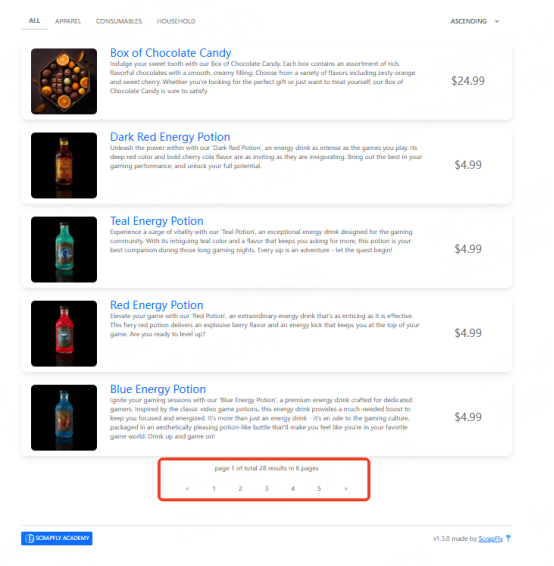

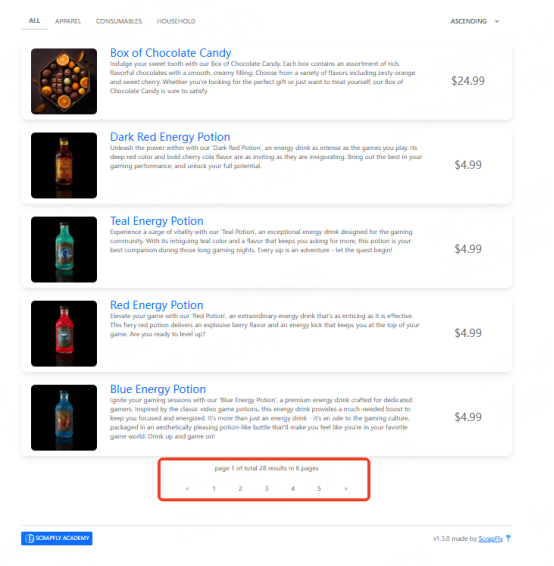

Paginated lists use numbered navigation to split data into multiple pages. This technique is common in e-commerce, search results, and data catalogs.

Here is an example of a paginated list site:

Paginated list on web-scraping.dev/products

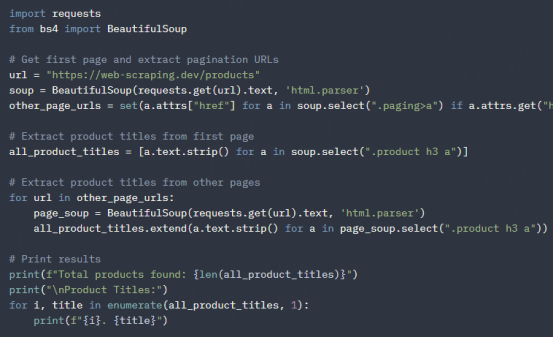

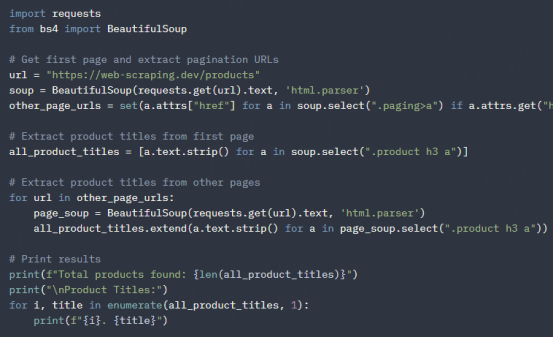

Here’s how to build a product list crawler that handles traditional pagination:
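
The sketch below is one minimal way to do this. It assumes the listing accepts a `?page=N` query parameter, that each product sits in an element with the class `product`, and that a page without products marks the end of the list. All three are assumptions to verify against the real site, and the function names are illustrative.

```python
import time
from typing import Callable, Optional

import requests
from bs4 import BeautifulSoup


def fetch_page(base_url: str, page: int) -> Optional[str]:
    """Download one listing page; return None on a non-200 response."""
    response = requests.get(base_url, params={"page": page}, timeout=10)
    return response.text if response.status_code == 200 else None


def parse_products(html: str) -> list[dict]:
    """Parse one listing page; the selectors are assumptions about the markup."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for node in soup.select(".product"):
        title = node.select_one("h3")
        price = node.select_one(".price")
        products.append({
            "title": title.get_text(strip=True) if title else None,
            "price": price.get_text(strip=True) if price else None,
        })
    return products


def crawl_paginated(base_url: str,
                    fetch: Callable[[str, int], Optional[str]] = fetch_page,
                    max_pages: int = 50,
                    delay: float = 1.0) -> list[dict]:
    """Walk page 1, 2, 3, ... until a page fails or contains no products."""
    all_products = []
    for page in range(1, max_pages + 1):
        html = fetch(base_url, page)
        if html is None:
            break
        products = parse_products(html)
        if not products:
            break  # an empty page is treated as the end of the list
        all_products.extend(products)
        time.sleep(delay)  # pause between requests to stay polite
    return all_products
```

A call such as `crawl_paginated("https://web-scraping.dev/products")` would then collect every page in turn. The `max_pages` cap guards against sites that keep returning the last page for out-of-range page numbers.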


When scraping product listings, you will encounter several challenges:

Pagination variations: Some sites use parameters like ?page=2, while others might use path segments like /page/2/ or even completely different URL structures.

Pagination limitations: Many websites limit the maximum number of pages that can be viewed (usually 20 to 50), even if there are thousands of products. You can work around this by splitting the crawl with filters, such as price ranges, so that each filtered result set fits within the page limit.

Layout changes: Product listing layout may vary from category to category or as part of a website update.

Missing data: Not all products contain complete information, so robust error handling is needed.
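
The price-range workaround for pagination limits can be sketched as follows. The query parameter names `min_price` and `max_price` are hypothetical, since every site names its filters differently, and the window size is something to tune until each window fits within the site's page limit.

```python
from urllib.parse import urlencode


def price_windows(low: int, high: int, step: int) -> list[tuple[int, int]]:
    """Split the range from low to high into consecutive price windows."""
    if step <= 0:
        raise ValueError("step must be positive")
    return [(start, min(start + step, high)) for start in range(low, high, step)]


def filtered_urls(base_url: str, low: int, high: int, step: int) -> list[str]:
    """Build one listing URL per price window."""
    # "min_price" and "max_price" are hypothetical filter names.
    return [
        f"{base_url}?{urlencode({'min_price': a, 'max_price': b})}"
        for a, b in price_windows(low, high, step)
    ]
```

Each generated URL can then be crawled page by page like any other listing. If the site treats both bounds as inclusive, a product priced exactly on a boundary may show up in two windows, so deduplicate by product ID or URL afterwards.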

Effective product list scraping requires techniques such as rate limiting, robust selectors, and comprehensive error handling to address these challenges.
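
Two of these techniques are sketched below under simple assumptions: retries with exponential backoff as a basic form of request limiting, and a price parser that returns `None` instead of raising when a field is missing or malformed. The helper names, retry count, and delays are illustrative choices, not recommendations for any specific site.

```python
import random
import time
from typing import Callable, Optional


def fetch_with_retries(fetch: Callable[[str], str], url: str,
                       retries: int = 3, base_delay: float = 1.0,
                       sleep: Callable[[float], None] = time.sleep) -> Optional[str]:
    """Call fetch(url), backing off exponentially between failed attempts."""
    for attempt in range(retries):
        try:
            return fetch(url)
        except Exception as exc:
            if attempt == retries - 1:
                print(f"giving up on {url}: {exc}")
                return None
            # Wait 1s, 2s, 4s, ... plus up to 0.5s of random jitter.
            sleep(base_delay * 2 ** attempt + random.uniform(0, 0.5))
    return None


def parse_price(text: Optional[str]) -> Optional[float]:
    """Convert text like '$1,299.00' to a float; None if missing or invalid."""
    if not text:
        return None
    cleaned = text.replace(",", "").strip().lstrip("$€£")
    try:
        return float(cleaned)
    except ValueError:
        return None
```

Returning `None` for a bad field keeps one incomplete product from aborting the whole crawl, and the missing values can be counted or reviewed afterwards.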

Now, let's explore how to handle dynamic lists that load content as you scroll.

Scraping infinite lists

Modern websites often implement infinite scrolling, a technique that continuously loads new content as the user scrolls down the page. These “infinite” lists present unique challenges for crawlers because the content is not split into separate pages but is loaded dynamically via JavaScript.

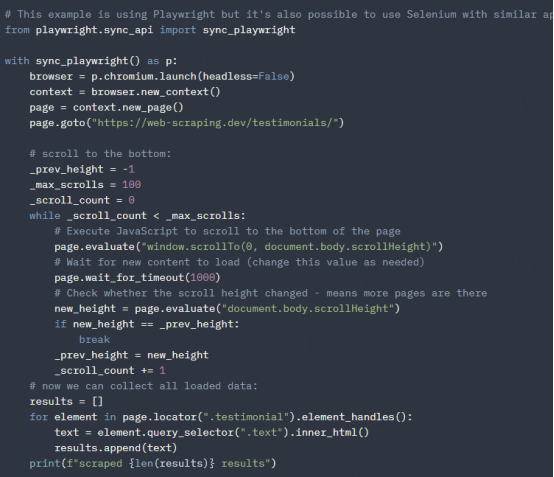

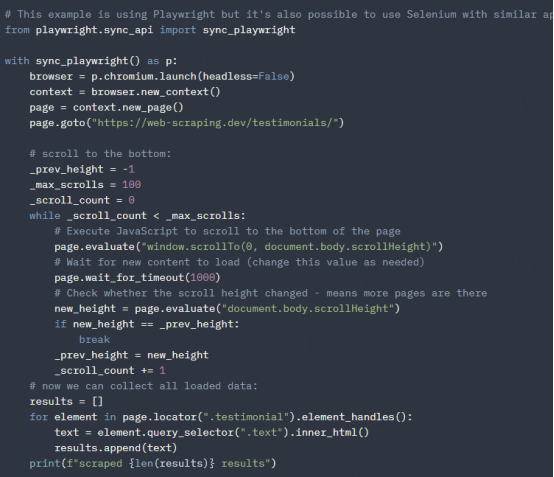

Here's how to scrape an infinite list using a headless browser in Playwright:

Sample output:

scraped 60 results

In the code above, we use Playwright to control the browser and scroll to the bottom of the page to load all the recommendations. We then collect the text of each recommendation and print the number of recommendations fetched. This approach can efficiently handle infinite lists of dynamically loaded content.
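
A minimal sketch of this scroll-and-collect loop is shown below. It assumes that new items are appended to the page each time the window reaches the bottom and that a fixed pause is long enough for them to load. The function names, the pause length, and the round limit are assumptions for illustration.

```python
def scroll_until_stable(page, item_selector: str,
                        pause_ms: int = 1000, max_rounds: int = 30) -> int:
    """Scroll to the bottom repeatedly until the item count stops growing."""
    previous = -1
    for _ in range(max_rounds):
        current = page.locator(item_selector).count()
        if current == previous:
            break  # no new items appeared after the last scroll
        previous = current
        page.evaluate("window.scrollTo(0, document.body.scrollHeight)")
        page.wait_for_timeout(pause_ms)  # give the next batch time to load
    return page.locator(item_selector).count()


def scrape_infinite_list(url: str, item_selector: str) -> list[str]:
    """Open the page in headless Chromium and return the text of every item."""
    # Imported here so scroll_until_stable can be reused without a browser.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        page = browser.new_page()
        page.goto(url)
        scroll_until_stable(page, item_selector)
        texts = page.locator(item_selector).all_text_contents()
        browser.close()
    return texts
```

A call such as `scrape_infinite_list("https://example.com/feed", ".item")` uses a placeholder URL and selector. Stopping when the count no longer changes is a simple heuristic; on slow pages a longer pause, or waiting for a specific network response, may be more reliable.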

Scraping list articles

Articles that contain lists (such as "Top 10 Programming Languages" or "Five Best Travel Destinations") are another valuable source of structured data. These lists are usually embedded in the article content, organized by headings or numbered sections.

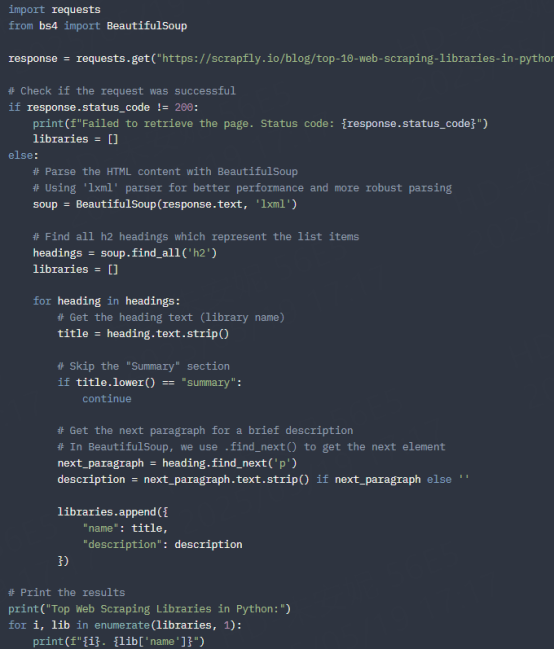

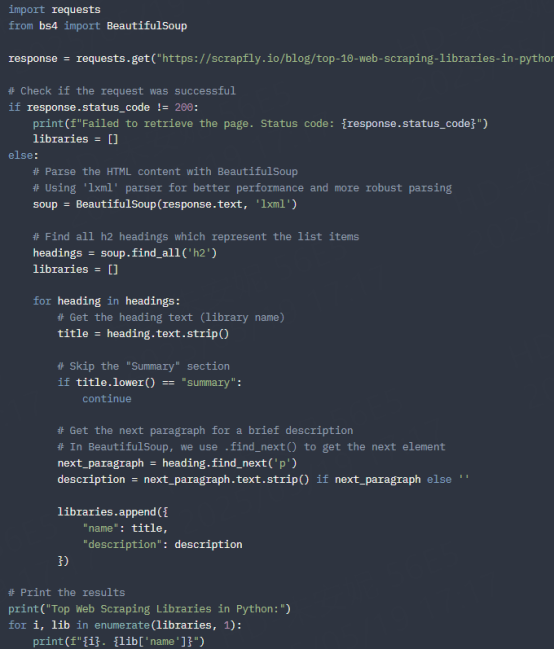

Here is an example of scraping list articles using requests and BeautifulSoup:

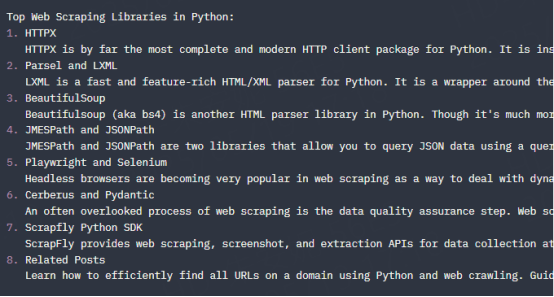

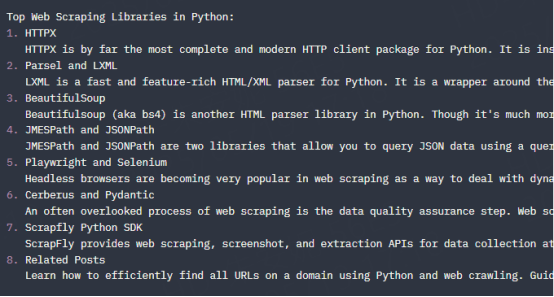


Extracting data from list articles requires understanding the content structure and accounting for variations in formatting. Some articles may use numbering in the title, while others rely entirely on the heading hierarchy. A robust crawler should be able to handle these variations and clean the extracted text to remove irrelevant content.
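
One way to handle both numbered and unnumbered headings is sketched below. It assumes each list entry is introduced by an `h2` or `h3` heading and described by the paragraphs that follow it at the same nesting level. That structure, the function name, and the number-stripping pattern are assumptions that will not fit every article.

```python
import re

from bs4 import BeautifulSoup

# Matches leading markers such as "1. ", "2) ", "#3 - " at the start of a heading.
NUMBER_PREFIX = re.compile(r"^\s*#?\d+\s*[.):-]\s*")


def extract_list_article(html: str, heading_tags=("h2", "h3")) -> list[dict]:
    """Turn heading-delimited sections of an article into ranked entries."""
    soup = BeautifulSoup(html, "html.parser")
    entries = []
    for heading in soup.find_all(list(heading_tags)):
        title = NUMBER_PREFIX.sub("", heading.get_text(" ", strip=True))
        paragraphs = []
        # Collect paragraphs until the next heading starts a new entry.
        for sibling in heading.find_next_siblings(True):
            if sibling.name in heading_tags:
                break
            if sibling.name == "p":
                paragraphs.append(sibling.get_text(" ", strip=True))
        entries.append({
            "rank": len(entries) + 1,  # position in the article, not the printed number
            "title": title,
            "description": " ".join(paragraphs),
        })
    return entries
```

The rank is taken from the order of the headings rather than from the printed number, so articles that count down ("10, 9, 8, ...") would need the number parsed from the heading instead.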

There are a few tools that can help you with list scraping:

newspaper4k: Implements functionality for parsing articles from HTML and provides various helper functions for recognizing lists.

goose3: Another library for extracting structured data from articles (including lists).

trafilatura: A powerful HTML parser with many pre-built functions to extract structured data from articles.

Conclusion

List scraping is essential for extracting structured data from the web's many list formats. From product catalogs and social feeds to nested articles and data tables, each list type requires a tailored approach.

The techniques demonstrated in this article, from HTTP requests for static content to browser automation for dynamic pages, provide powerful tools for transforming unstructured web data into valuable, actionable insights.

Using the Universal Scraping API for list crawling can make data acquisition efficient and stable. Features such as simulated real-user browser fingerprints and automatic JavaScript rendering improve both the crawl success rate and the accuracy of the data. Register and log in now to use it for free.