Zillow is a leading online real estate marketplace that provides a wealth of information for homebuyers, sellers, renters, and real estate professionals. With its comprehensive database of property listings, detailed agent profiles, and extensive market data, Zillow has become an indispensable tool for anyone involved in the real estate industry.

However, extracting this data for large-scale analysis can be challenging because Zillow's anti-bot systems are designed to detect and block automated scraping attempts. This guide shows how to scrape agent contact numbers and other valuable data from Zillow using the Universal Scraping API.

What data can be captured from Zillow agent pages?

Understanding the target page structure is a prerequisite for efficient scraping. Zillow's agent information is spread across two types of pages:

Agent listing page

Personal details page

Before scraping either page, it helps to know what data each one contains.

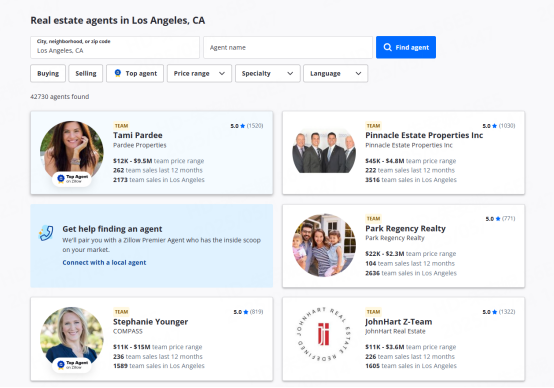

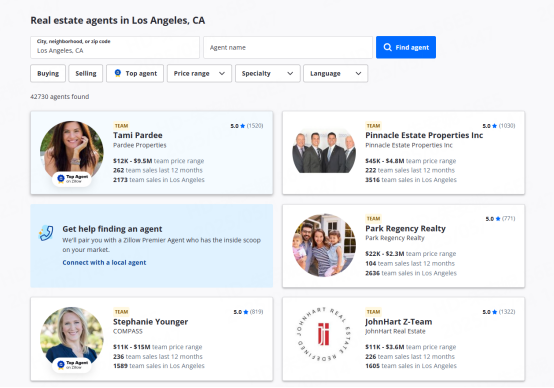

1. Agent Listing Page

On the Agent Listing Page, you can get each agent's name, location, rating, number of reviews, contact information (such as email and phone number), official website link, and brokerage name. This information is usually displayed in a list format, with each agent occupying a row or a small card.

This page lets users browse agents by location, for example agents in Los Angeles, California.

This page is your starting point for collecting agent data and provides a snapshot of each agent's profile.
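The listing-page fields described above can be collected into one record per agent. The sketch below is only an illustration: the field names and the `toAgentListing` helper are hypothetical, and the raw input is assumed to be text that has already been pulled out of a listing card.

```javascript
// Hypothetical record shape for one agent on the listing page.
// Field names are illustrative; they mirror the data described above.
function toAgentListing(raw) {
  // Trim a text value and turn empty or missing strings into null.
  const clean = (value) => {
    const text = String(value ?? '').trim();
    return text === '' ? null : text;
  };
  // Parse a number such as "4.9" or "1,234"; return null if not numeric.
  const toNumber = (value) => {
    const text = clean(value);
    if (text === null) return null;
    const parsed = Number(text.replace(/,/g, ''));
    return Number.isFinite(parsed) ? parsed : null;
  };

  return {
    name: clean(raw.name),
    location: clean(raw.location),
    rating: toNumber(raw.rating),
    reviewCount: toNumber(raw.reviewCount),
    phone: clean(raw.phone),
    email: clean(raw.email),
    website: clean(raw.website),
    brokerage: clean(raw.brokerage),
  };
}
```

Using `null` for anything missing, rather than an empty string, makes it easier to tell later which agents lack a phone number or email.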

2. Personal Details Page

On the Personal Details Page, you can get more detailed information. This information is usually distributed in different parts of the page and needs to be located and extracted through web scraping technology.

Click an agent's name on the listing page to open this page.

This page is particularly useful for lead generation and contains direct contact information.
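Phone numbers scraped from profile pages tend to arrive in mixed formats, so it can help to normalize them before using them as leads. The helper below is a minimal sketch that assumes US numbers only; `normalizeUsPhone` is a hypothetical name, not part of any API mentioned in this guide.

```javascript
// Normalize a US phone number to E.164 form (+1XXXXXXXXXX).
// Returns null when the input does not contain a plausible US number.
function normalizeUsPhone(input) {
  const digits = String(input ?? '').replace(/\D/g, '');
  // Accept 10 digits, or 11 digits with a leading country code 1.
  const national =
    digits.length === 11 && digits.startsWith('1') ? digits.slice(1) : digits;
  if (national.length !== 10) return null;
  // In the North American Numbering Plan, area codes start with 2-9.
  if (!/^[2-9]/.test(national)) return null;
  return `+1${national}`;
}
```

For example, `normalizeUsPhone('(310) 555-0123')` and `normalizeUsPhone('1-310-555-0123')` both return `'+13105550123'`, so duplicates written in different styles can be matched.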

Why not use traditional data scraping tools?

Traditional browser automation tools, such as Puppeteer and Playwright, are often inadequate on their own for scraping data from Zillow, for several reasons.

Zillow employs sophisticated anti-bot systems that can easily detect repetitive behaviors and patterns associated with automated scraping, such as identical browser fingerprints or unusual request frequencies. These systems can trigger CAPTCHAs, block IP addresses, or even impose temporary bans, making large-scale data extraction impractical.
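One of the signals mentioned above is request frequency. As a rough illustration, a client that gets throttled can wait longer after each failed attempt and add randomness so that retries do not follow a fixed rhythm. The sketch assumes that HTTP 403 and 429 indicate blocking, which is a common convention rather than documented Zillow behavior; `isLikelyBlocked` and `backoffDelayMs` are hypothetical helper names.

```javascript
// Status codes that commonly signal throttling or blocking (an assumption;
// the codes Zillow actually returns are not documented in this guide).
const BLOCK_STATUSES = new Set([403, 429]);

function isLikelyBlocked(status) {
  return BLOCK_STATUSES.has(status);
}

// Exponential backoff with "full jitter": a random delay between 0 and
// min(maxMs, baseMs * 2^attempt). `random` is injectable for testing.
function backoffDelayMs(attempt, baseMs = 1000, maxMs = 30000, random = Math.random) {
  const ceiling = Math.min(maxMs, baseMs * 2 ** attempt);
  return Math.floor(random() * ceiling);
}
```

A retry loop would call `backoffDelayMs(attempt)` and sleep for that long whenever `isLikelyBlocked(response.status)` is true. Spacing requests this way reduces one detectable pattern but does nothing about fingerprinting.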

Additionally, keeping a self-managed scraper in sync with Zillow's dynamic, JavaScript-rendered pages takes ongoing effort, and any content that is missed leads to incomplete or inaccurate data. To overcome these challenges, a specialized tool such as the Universal Scraping API is a better fit. It is designed to mimic human-like interactions, minimize detectable footprints, and handle dynamic content, which makes data extraction from Zillow more reliable and scalable.

How to unblock Zillow with the Universal Scraping API?

1. Browser fingerprinting

Zillow watches for behavior that does not look human, such as a fixed scrolling speed or mechanical mouse movement. The Universal Scraping API automatically simulates human-like behavior and keeps its interaction with the browser minimal to avoid these checks.

2. Automatic proxy rotation

When the same IP address accesses a site too frequently, the anti-bot system is triggered and the requests may be flagged or blocked. LunaProxy automatically assigns IPs and optimizes user agents.
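To make the idea of rotation concrete, here is a minimal round-robin sketch. It is not how LunaProxy works internally, which this guide does not describe; `createRotator` is a hypothetical helper and the proxy addresses are placeholders from a documentation-only IP range.

```javascript
// Round-robin rotation over a fixed pool. A managed service handles this
// for you; the sketch only shows the idea.
function createRotator(pool) {
  if (!Array.isArray(pool) || pool.length === 0) {
    throw new Error('pool must be a non-empty array');
  }
  let index = 0;
  return {
    // Return the next item and advance, wrapping at the end of the pool.
    next() {
      const item = pool[index];
      index = (index + 1) % pool.length;
      return item;
    },
  };
}

// Placeholder proxy addresses (TEST-NET range, not real proxies).
const proxies = createRotator([
  'http://203.0.113.10:8000',
  'http://203.0.113.11:8000',
  'http://203.0.113.12:8000',
]);
```

Each call to `proxies.next()` returns the next address in the pool, so consecutive requests leave from different IPs. The same helper could rotate a list of user-agent strings.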

Prepare: Set up LunaProxy

Sign up for a LunaProxy account and open the API details. Note your API endpoint and token, which are used for authentication.
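Rather than hard-coding the endpoint and token in a script, one option is to read them from environment variables. The sketch below assumes Node.js; the variable names `LUNAPROXY_API_URL` and `LUNAPROXY_API_KEY` and the `loadConfig` helper are hypothetical and not defined by the service.

```javascript
// Read API settings from environment variables instead of hard-coding them.
// The variable names LUNAPROXY_API_URL and LUNAPROXY_API_KEY are hypothetical.
function loadConfig(env = process.env) {
  const endpoint = env.LUNAPROXY_API_URL;
  const apiKey = env.LUNAPROXY_API_KEY;
  const missing = [];
  if (!endpoint) missing.push('LUNAPROXY_API_URL');
  if (!apiKey) missing.push('LUNAPROXY_API_KEY');
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  // Fail early on a malformed endpoint; new URL() throws on invalid input.
  new URL(endpoint);
  return { endpoint, apiKey };
}
```

Calling `loadConfig()` at startup makes a missing token fail immediately with a clear message instead of surfacing later as a rejected request.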

The following is the complete code implementation using the Universal Scraping API:

Phase 1: Scrape profile URLs from the agent listing page

Goal: Get all agent personal page links in the Los Angeles area

import fetch from 'node-fetch';
import * as cheerio from 'cheerio';
import { createObjectCsvWriter } from 'csv-writer';

// Universal Scraping API configuration
const UNIVERSAL_SCRAPING_API = 'https://api.lunaproxy.com/v1/scrape';
const API_KEY = 'your_api_key'; // Replace with the actual key

const scrapeDirectory = async () => {
  const targetUrl = 'https://www.zillow.com/professionals/real-estate-agent-reviews/los-angeles-ca/';

  // Query parameters for the scraping request
  const params = {
    url: targetUrl,
    render: 'full', // Enable headless browser rendering
    proxy: JSON.stringify({
      type: 'residential',
      country: 'us'
    })
  };

  try {
    // Step 1: Send the scraping request.
    // The API key is sent as an HTTP header, not as a query parameter.
    const response = await fetch(`${UNIVERSAL_SCRAPING_API}?${new URLSearchParams(params)}`, {
      headers: { 'X-API-Key': API_KEY }
    });
    if (!response.ok) {
      throw new Error(`Request failed with status ${response.status}`);
    }
    const html = await response.text();

    // Step 2: Parse the HTML and collect unique profile links
    const $ = cheerio.load(html);
    const profileUrls = new Set();
    $('a[href^="/profile/"]').each((i, el) => {
      const path = $(el).attr('href');
      profileUrls.add(`https://www.zillow.com${path}`);
    });

    // Step 3: Save the results
    const csvWriter = createObjectCsvWriter({
      path: 'zillow_profiles.csv',
      header: [{ id: 'url', title: 'Profile URL' }]
    });
    await csvWriter.writeRecords([...profileUrls].map(url => ({ url })));
    console.log(`Saved ${profileUrls.size} agent profile links`);
  } catch (error) {
    console.error('Failed to scrape the listing page:', error);
  }
};

scrapeDirectory();
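A listing card may link to the same profile more than once, and the links can differ only by a query string or trailing slash. The sketch below shows one way to collapse such variants before saving them. It assumes, as the selector in the script above does, that profile paths start with `/profile/`; `normalizeProfileUrls` is a hypothetical helper.

```javascript
// Turn raw href values into unique absolute profile URLs.
function normalizeProfileUrls(hrefs, base = 'https://www.zillow.com') {
  const seen = new Set();
  for (const href of hrefs) {
    let url;
    try {
      url = new URL(href, base); // resolves relative paths against base
    } catch {
      continue; // skip malformed hrefs
    }
    if (!url.pathname.startsWith('/profile/')) continue;
    // Drop query string and fragment so variants of one profile collapse.
    url.search = '';
    url.hash = '';
    // Ensure exactly one trailing slash.
    url.pathname = url.pathname.replace(/\/+$/, '') + '/';
    seen.add(url.href);
  }
  return [...seen];
}
```

The function takes the list of `href` values collected in Step 2 and returns the deduplicated absolute URLs, which can then be written to the CSV file.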

Phase 2: Extract contact information and transaction data

Goal: Extract each agent's name, phone number, and 12-month sales count from the personal pages

import fs from 'fs';
import fetch from 'node-fetch';
import { createObjectCsvWriter } from 'csv-writer';

// Same API configuration as in Phase 1
const UNIVERSAL_SCRAPING_API = 'https://api.lunaproxy.com/v1/scrape';
const API_KEY = 'your_api_key'; // Replace with the actual key

// Configure output structure
const outputWriter = createObjectCsvWriter({
  path: 'agent_details.csv',
  header: [
    { id: 'name', title: 'Name' },
    { id: 'phone', title: 'Phone' },
    { id: 'sales', title: '12M Sales' }
  ]
});

// Process the profile URLs one at a time
const processProfiles = async () => {
  const urls = fs.readFileSync('zillow_profiles.csv', 'utf-8')
    .split('\n')
    .slice(1) // Skip the header line
    .map(line => line.trim())
    .filter(line => line !== ''); // Ignore blank lines, e.g. after the trailing newline

  for (const url of urls) {
    const params = {
      url: url,
      js_instructions: JSON.stringify([
        { action: 'waitForSelector', selector: '.agent-detail-section' } // Wait for key elements to load
      ]),
      extract_rules: JSON.stringify({
        name: '.agent-name::text',
        phone: 'meta[itemprop="telephone"]::attr(content)',
        sales: 'div.sales-stats::text => match(/\\d+/)[0]' // Use custom parsing rules
      })
    };

    try {
      // Send the API key as a header, as in Phase 1
      const response = await fetch(`${UNIVERSAL_SCRAPING_API}?${new URLSearchParams(params)}`, {
        headers: { 'X-API-Key': API_KEY }
      });
      if (!response.ok) {
        throw new Error(`Request failed with status ${response.status}`);
      }
      const data = await response.json();
      const extracted = data.extracted ?? {}; // Guard against a missing result object

      await outputWriter.writeRecords([{
        name: extracted.name || 'N/A',
        phone: extracted.phone || 'N/A',
        sales: extracted.sales || 0
      }]);
    } catch (error) {
      console.error(`Processing failed: ${url}`, error);
    }
  }
};

processProfiles();
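The loop above handles one profile at a time. For longer URL lists, a possible refinement is to process several profiles in parallel, a small group at a time. The sketch below is illustrative only: `chunk` and `processInBatches` are hypothetical helpers, and a suitable batch size depends on rate limits that this guide does not specify.

```javascript
// Split an array into consecutive groups of at most `size` items.
function chunk(items, size) {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError('size must be a positive integer');
  }
  const groups = [];
  for (let i = 0; i < items.length; i += size) {
    groups.push(items.slice(i, i + size));
  }
  return groups;
}

// Run `worker` on every item, `size` items at a time. Failures are captured
// per item so one bad profile does not abort the run.
async function processInBatches(items, size, worker) {
  const results = [];
  for (const group of chunk(items, size)) {
    // Wrapping in an async function turns synchronous throws into rejections.
    const settled = await Promise.allSettled(group.map(async (item) => worker(item)));
    results.push(...settled);
  }
  return results;
}
```

Here `worker` would be a function that takes one profile URL and performs the request and extraction for it. Each entry in the returned array has a `status` of `'fulfilled'` or `'rejected'`, in the same order as the input.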

Conclusion

With the Universal Scraping API, scraping Zillow data becomes much more manageable. Using LunaProxy's features, real estate professionals can extract valuable agent data, including contact numbers, reviews, and sales statistics, with a much lower risk of detection or blocking.

Ready to go? Sign up for a free trial now and test it out in your next scraping project.

FAQ

1. Is scraping data from Zillow legal?

Scraping public data is generally allowed, but before using the Universal Scraping API, it is recommended to carefully read Zillow's terms of use and consult with legal professionals.

2. How is the accuracy of Zillow data scraped by the Universal Scraping API guaranteed?

Since the Zillow page structure may change, the selectors and extraction rules used with the Universal Scraping API need to be tested and adjusted regularly to keep data extraction accurate. In addition, data quality can be improved through data validation and cleaning.
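As a small example of validation, the check below flags records that look incomplete. It is a sketch under stated assumptions: the field names follow the `agent_details.csv` columns used earlier, phone numbers are assumed to be US numbers, and `validateAgentRecord` is a hypothetical helper.

```javascript
// Return a list of problems found in one scraped record; an empty list
// means the record passed every check. Field names follow agent_details.csv.
function validateAgentRecord(record) {
  const problems = [];

  const name = String(record.name ?? '').trim();
  if (name === '' || name === 'N/A') problems.push('missing name');

  // Accept 10 digits, or 11 digits with a leading country code 1.
  const digits = String(record.phone ?? '').replace(/\D/g, '');
  const validLength =
    digits.length === 10 || (digits.length === 11 && digits.startsWith('1'));
  if (!validLength) problems.push('phone is not a 10-digit US number');

  const sales = Number(record.sales);
  if (!Number.isInteger(sales) || sales < 0) {
    problems.push('sales is not a non-negative integer');
  }
  return problems;
}
```

Records with a non-empty problem list can be logged and re-scraped later. A sudden rise in failures is often a sign that a selector no longer matches the page.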

3. How to start using the Universal Scraping API?

Register a LunaProxy account, configure the scraping task through the dashboard, and quickly scrape the required data.